Your AI Sucks Because You Suck at Documentation

The gap between AI winners and whiners is growing, and it's entirely self-inflicted

I'm exhausted by the endless stream of AI complaints from anti-AI edgelords flooding my feed. Every day, it's the same recycled takes from people who spent five minutes with ChatGPT or Claude, failed to build their billion-dollar app, and concluded the entire technology is worthless.

These people are fighting an invisible bogeyman they've collectively created in their echo chambers. Let's talk about why they're wrong, and more importantly, what they're missing.

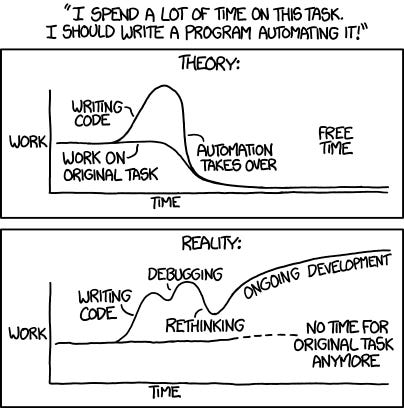

"AI can't code! I asked it to build my startup and all I got was a steaming pile of shit!"

This is like complaining that a hammer can't build a house by itself.

I regularly use AI to generate boilerplate CRUD operations, write test suites, convert designs to Tailwind components, and refactor messy functions. Yesterday, I built an entire authentication system in 30 minutes that would've taken all day without AI.

The difference is that I know what I want before I ask for it. Be specific. "Build me a SaaS" gets you garbage. "Write a Python function that validates email addresses using regex, handles edge cases for subdomains, and returns specific error messages" gets you gold, though even that prompt gets better with more context.
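For illustration, here is a minimal sketch of the kind of function that specific prompt might return. The name `validate_email`, the error messages, and the exact rules are assumptions, not real AI output; it is a pragmatic check, not a full RFC 5322 validator.

```python
import re

# Hypothetical output of the "specific" prompt. A pragmatic check,
# not a full RFC 5322 validator.
LOCAL_PART = re.compile(r"[A-Za-z0-9._%+-]+")
# One DNS label: 1-63 chars, letters/digits/hyphens, no leading/trailing hyphen.
DOMAIN_LABEL = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?")


def validate_email(address: str) -> tuple[bool, str]:
    """Return (is_valid, message); message explains the first problem found."""
    if address.count("@") != 1:
        return False, "Address must contain exactly one '@'."
    local, domain = address.split("@")
    if not local:
        return False, "Local part (before '@') is empty."
    if not LOCAL_PART.fullmatch(local):
        return False, "Local part contains invalid characters."
    if local.startswith(".") or local.endswith(".") or ".." in local:
        return False, "Local part has a leading, trailing, or repeated dot."
    labels = domain.split(".")
    if len(labels) < 2:
        return False, "Domain needs at least one dot, e.g. example.com."
    # Subdomains are simply extra labels, so mail.eu.example.co.uk passes.
    for label in labels:
        if not DOMAIN_LABEL.fullmatch(label):
            return False, f"Invalid domain label: '{label}'."
    if not labels[-1].isalpha() or len(labels[-1]) < 2:
        return False, "Top-level domain must be at least two letters."
    return True, "OK"
```

Even this needs review: real addresses can legally contain quoted local parts and internationalized domains that this sketch rejects. That is exactly the kind of gap extra context in the prompt would close.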

But here's what the complainers don't understand: AI needs context, just like a human developer would.

"It hallucinates! It told me a library function that doesn't exist!"

Yes, and humans never make mistakes, right? At least AI doesn't show up hungover on Monday.

It takes 10 seconds to verify a function exists. Even when AI invents a function name, the logic often points you in the right direction. I've had Claude suggest non-existent methods that led me to discover the actual method I needed.
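For Python libraries, even those ten seconds can be scripted. Here is a minimal sketch that uses only the standard library (`importlib.import_module`, `hasattr`, `getattr`, `callable`). The helper name `function_exists` is hypothetical.

```python
import importlib


def function_exists(module_name: str, attr_path: str) -> bool:
    """Return True if module_name exposes attr_path (dotted, e.g. "path.join") as a callable."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:  # also covers ModuleNotFoundError
        return False
    # Walk each dotted segment; a missing one means the suggestion was invented.
    for part in attr_path.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return callable(obj)
```

For example, `function_exists("json", "parse")` returns False (a plausible mix-up with JavaScript's `JSON.parse`), while `function_exists("json", "loads")` returns True. Importing a module runs its top-level code, so only point this at packages you have already installed and trust.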

Here's the actual solution:

If AI keeps hallucinating something you do often, write it yourself, to your own standard, and keep it in your project as a stub. Create comprehensive, stubbed code examples of your common patterns. When AI sees your actual code structure, it stops inventing and starts following your lead.

"It writes buggy, insecure code!"

Are you for real, my guy? I've got news for you: so does every junior developer, and so do most seniors. At least AI doesn't get defensive when you point out mistakes.

AI code needs review, just like human code. The difference is AI can generate 100 variations in the time it takes a human to write one. Use it for rapid prototyping, then refine.

Pro tip: Ask AI to review its own code for vulnerabilities. Then ask again with a different approach. It catches its own mistakes surprisingly well when prompted correctly.

"It doesn't understand my project!"

Noooooo REALLLY?! You wouldn't throw a new engineer into a complex codebase and expect magic. You'd give them documentation, training, and context. AI is no different.

This is where 99% of people fail spectacularly. They treat AI like it should be omniscient instead of treating it like what it is: an incredibly capable junior developer who needs proper onboarding.

Stop Being Lazy and Set Up Your AI Properly

Here's what successful AI users do that complainers don't:

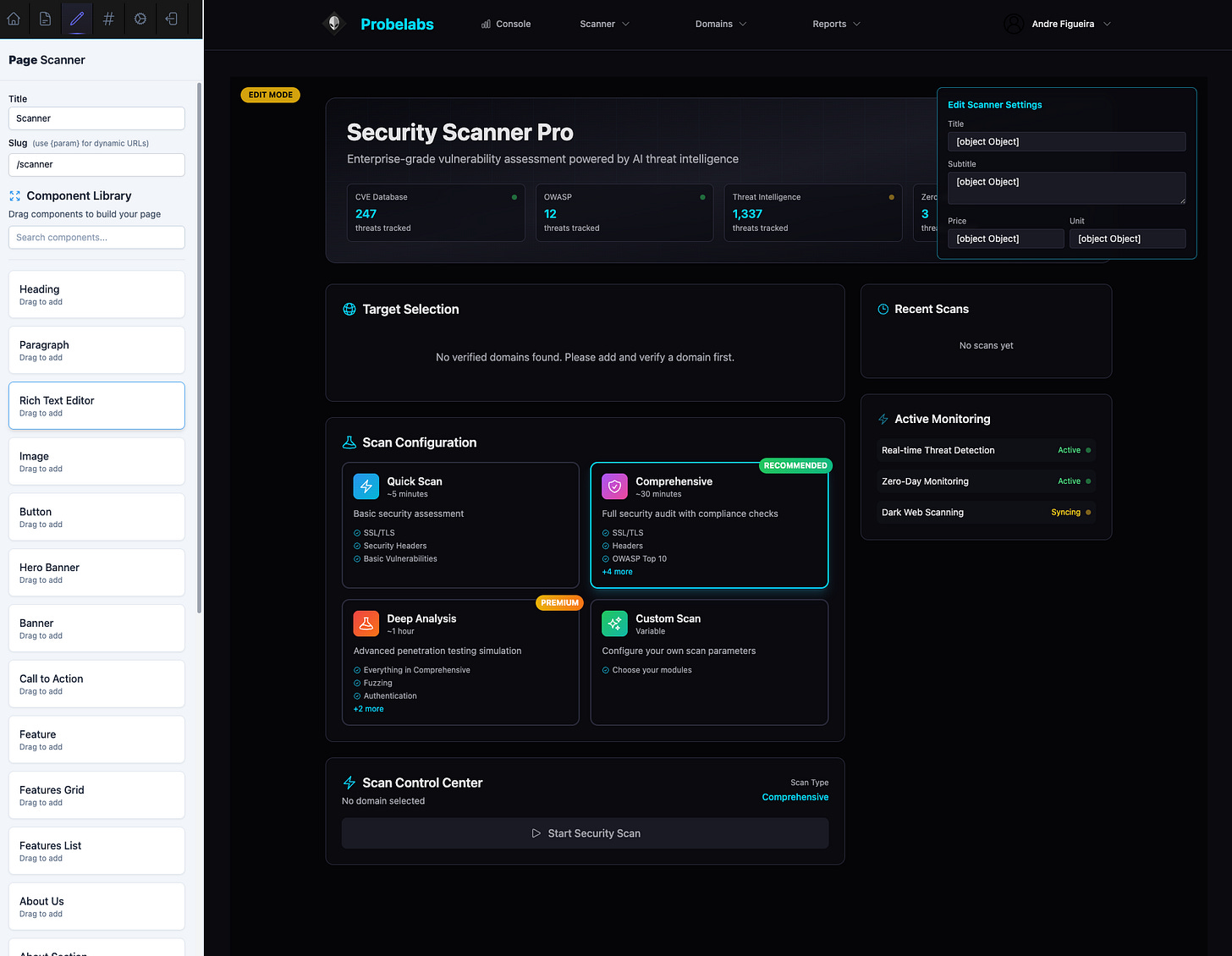

Create an agents.md file

Document how AI should interact with your project. Include:

Project structure and architecture

Coding standards and conventions

Common patterns you use

Libraries and their specific versions

Database schemas

API endpoints and their purposes
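The file only helps if it stays complete as the project changes, so a small check can flag gaps before the AI ever reads it. The sketch below is one hypothetical way to do that in Python. It assumes each topic gets its own markdown heading. The heading names in `REQUIRED_SECTIONS` and the helpers `missing_sections` and `check_agents_file` are illustrative, not any standard agents.md format.

```python
import re
from pathlib import Path

# Hypothetical heading names mirroring the checklist above; rename to taste.
REQUIRED_SECTIONS = (
    "Project Structure",
    "Coding Standards",
    "Common Patterns",
    "Libraries",
    "Database Schema",
    "API Endpoints",
)

# A markdown ATX heading: 1-6 '#', a space, the title, optional closing '#'s.
HEADING = re.compile(r"^#{1,6}[ \t]+(.+?)[ \t#]*$", re.MULTILINE)


def missing_sections(markdown: str, required: tuple[str, ...] = REQUIRED_SECTIONS) -> list[str]:
    """Return the required section names that no heading mentions (case-insensitive)."""
    headings = [m.group(1).lower() for m in HEADING.finditer(markdown)]
    return [name for name in required if not any(name.lower() in h for h in headings)]


def check_agents_file(path: str = "agents.md") -> None:
    """Exit with an error listing missing sections; suitable for a CI step."""
    missing = missing_sections(Path(path).read_text(encoding="utf-8"))
    if missing:
        raise SystemExit(f"{path} is missing sections: {', '.join(missing)}")
```

Calling `check_agents_file()` from a CI job or pre-commit step would fail the build when someone deletes a section. That keeps the AI's onboarding doc from quietly rotting.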

Write a proper claude.md (or equivalent)

This is your AI instruction manual. Include:

How you want code formatted

Error handling patterns

Testing requirements

Security considerations

Performance standards

Comment style

Maintain project documentation in markdown

AI reads markdown brilliantly. Keep your:

README files updated

API documentation current

Architecture decisions documented

Setup instructions clear

Every piece of documentation you write for AI makes you a better developer anyway. Funny how that works.

Use well-written, commented code

Good comments aren't just for humans anymore. When your code explains itself, AI understands your intent and maintains your patterns. Write code like you're teaching someone, because you literally are.

Create comprehensive stub examples

If you have specific ways of handling authentication, API calls, or data validation, create stub files with examples. Put them in a /stubs or /examples directory. Reference them in your agents.md. Now AI follows YOUR patterns instead of generic ones.

For instance, I have a stubs/api-handler.js that shows exactly how I want errors handled, responses formatted, and logging implemented. AI never deviates from this pattern because it has a clear example to follow.
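The stubs/api-handler.js file itself isn't shown, so the following is a hypothetical Python sketch of what such a stub can look like. It is one wrapper that decides how errors are handled, how responses are shaped, and what gets logged. The names `ApiError` and `handle`, and the `ok`/`status`/`data`/`error` envelope, are assumptions for illustration.

```python
import logging
from typing import Any, Callable

logger = logging.getLogger("api")


class ApiError(Exception):
    """An expected, client-facing failure carrying an HTTP-style status code."""

    def __init__(self, message: str, status: int = 400) -> None:
        super().__init__(message)
        self.status = status


def handle(action: Callable[[], Any]) -> dict[str, Any]:
    """Run an endpoint body and wrap the result in one response envelope.

    Every endpoint returns the same keys (ok, status, data, error), so
    neither teammates nor an AI assistant copying this stub invent a new shape.
    """
    try:
        data = action()
    except ApiError as exc:
        # Expected failure: log a warning and pass the message to the client.
        logger.warning("request failed (%d): %s", exc.status, exc)
        return {"ok": False, "status": exc.status, "data": None, "error": str(exc)}
    except Exception:
        # Unexpected failure: log the traceback, but hide internals from the client.
        logger.exception("unhandled error")
        return {"ok": False, "status": 500, "data": None, "error": "Internal server error"}
    logger.info("request succeeded")
    return {"ok": True, "status": 200, "data": data, "error": None}
```

An endpoint body then only returns data or raises something like `ApiError("User not found", status=404)`. The wrapper decides everything else, and that consistency is what an assistant can copy.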

Teach your agents how your project actually works

You wouldn't hand a new engineer at a good company the codebase and just say "good luck." You'd give them:

Onboarding documentation

Code review standards

Example pull requests

Architecture overviews

Style guides

AI needs the same thing. The difference between "AI sucks at coding" and "AI saves me hours daily" is literally just proper documentation and context.

Real Examples from My Workflow

Last week, I needed to add a complex filtering system to an existing app. Instead of complaining that AI "doesn't get it," I:

Updated my agents.md with the current data structure

Added a stub showing how I handle similar filters elsewhere

Documented the performance requirements

Specified the exact libraries and versions we use

Result? AI generated a complete filtering system that followed our patterns perfectly. Two hours of setup documentation saved me two days of coding.

Another example: my team was tired of AI suggesting deprecated Vue patterns. The solution was a vue-standards.md file documenting our current practices, the composables and lifecycle hooks we prefer, and our state management patterns. Now every AI suggestion follows our modern Vue standards.

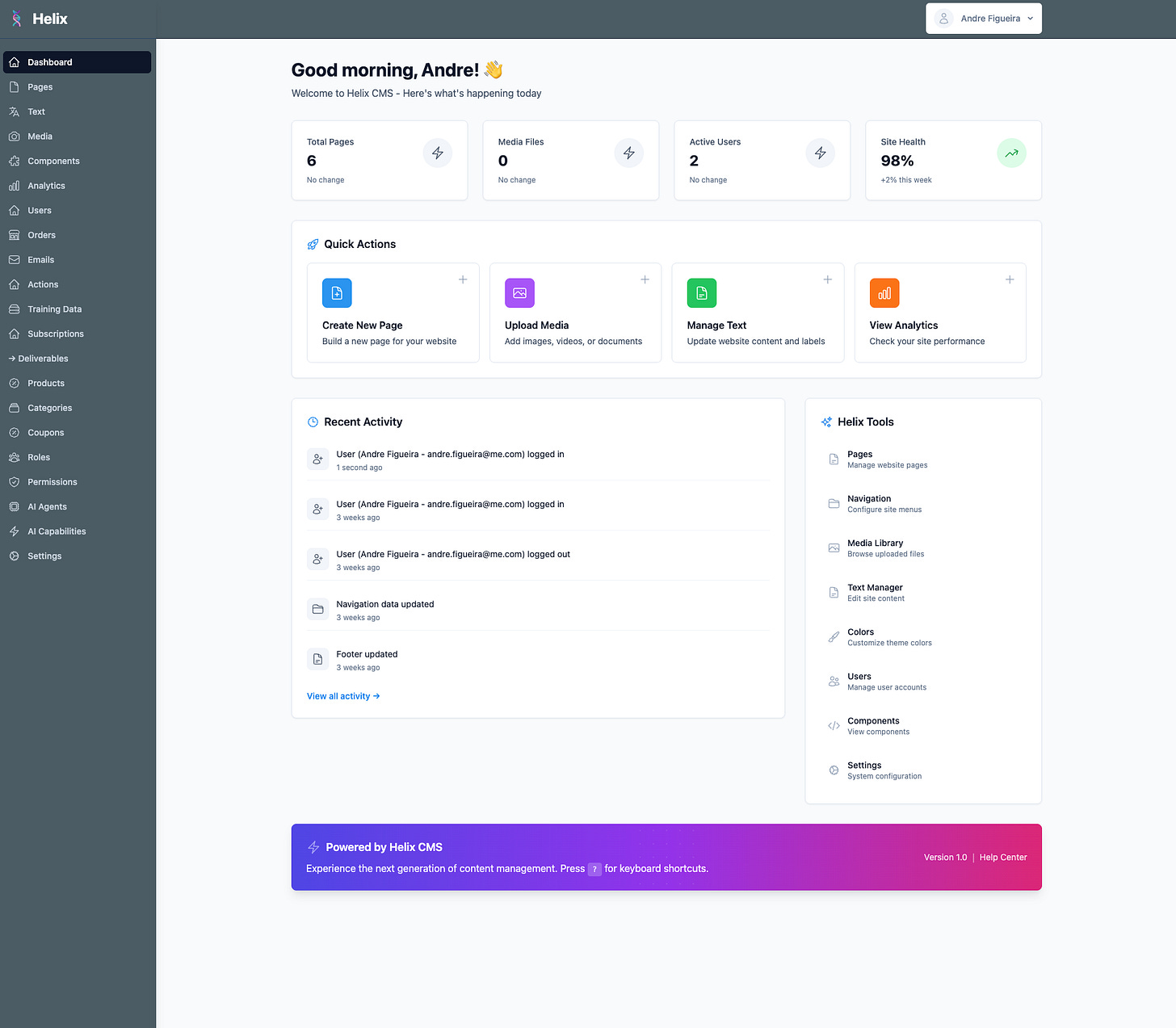

Case Study: My CMS, Built at 10x Speed

I built a complete CMS powered by Laravel and Vue.js, and here's the kicker: AI writes 90% of my components now. Not garbage components. Production-ready, following-my-exact-patterns components.

How? I did the work upfront.

I wrote the initial components myself. When I noticed patterns repeating, I turned them into stubs. HTML structures, CSS patterns, Laravel code conventions, JavaScript style preferences. All documented, all stubbed, all referenceable.

The real power comes from my dynamic component system. I created templates showing exactly how components should:

Handle props and state

Manage API calls

Structure their templates

Handle errors

Emit events to parent components

Follow my specific naming conventions

Now when I need a new data table component, AI gets it right most of the time, following my exact patterns. Need a form with complex validation? AI knows exactly how I want it structured because I showed it with examples. Want a dashboard widget? AI follows my stub patterns and creates something indistinguishable from what I would write myself. You get the idea.

Thanks to this setup, I can build huge projects in a fraction of the time. What used to take me weeks now takes days. And the code quality is excellent, because AI isn't guessing: it's following my documented, stubbed, proven patterns.

The complainers would look at this and say "but you had to write all those stubs!" Yeah, I spent maybe two days creating comprehensive stubs and documentation. Those two days now save me two weeks on every project. But sure, keep complaining that AI "doesn't work" while I'm shipping entire CMS platforms in the time it takes you to argue on LinkedIn.

The Whiners vs. The Winners

The Whiners:

Try once, fail, give up

Never document anything

Expect AI to read their minds

Complain about hallucinations instead of preventing them

Think context is optional

Treat AI like magic instead of a tool

The Winners:

Build comprehensive documentation

Create reusable stubs and examples

Iterate on their prompts

Maintain proper project context

Update their AI instructions as projects evolve

Save hours every single day

I've watched junior developers build in a weekend what would've taken months. But you know what? They all had proper documentation and context set up first.

Stop Making Excuses

Every time someone posts "AI can't code," what they're really saying is "I can't be bothered to set up proper documentation and context."

Every "it hallucinates" complaint translates to "I never created examples of what I actually want."

Every "it doesn't understand my project" means "I expected it to be psychic rather than spending 30 minutes writing documentation."

The tools are there. The patterns work. The productivity gains are real. But they require effort upfront, just like training a human developer would.

The Predictable Meltdown When You Call Them Out

Here's what happens every single time you point out these flaws to the AI complainers. Instead of engaging with the substance, they immediately resort to:

"You're just caught up in the hype!"

Ah yes, the hype of... checks notes... shipping working products faster. The hype of comprehensive test coverage. The hype of documentation that actually exists. What a terrible bandwagon to jump on.

"You're not a real developer if you need AI!"

This from people who copy-paste from Stack Overflow without understanding what the code does. At least when I use AI, I review, understand, and modify the output. But sure, tell me more about "real" development while you're still manually writing getters and setters in 2025.

"It's just making developers lazy!"

Lazy? I spent days creating comprehensive documentation, stubs, and context files. I maintain multiple markdown files explaining my architecture. I review and refine every piece of generated code. Meanwhile, you can't even be bothered to write a README. Who's lazy here?

"You clearly don't understand software engineering!"

This one's my favourite. It usually comes from someone who hasn't updated their workflow since 2015. Yes, I clearly don't understand software engineering, which is why I'm shipping production apps in a fraction of the time with better documentation and test coverage than you've ever achieved.

"AI code is garbage for serious projects!"

They say this while their "serious" project has no documentation, inconsistent patterns, and that one file everyone's afraid to touch because nobody knows what it does. My AI-assisted code follows consistent patterns because I defined them. Your hand-written code is spaghetti because you never bothered to establish standards.

The Hand-Wavy Dismissals

Instead of addressing how proper documentation and stubs solve their complaints, they pivot to vague philosophical concerns about "the future of programming" or "what it means to be a developer."

They'll throw around terms like "technical debt" without explaining how properly documented, consistently patterned, well-tested code creates more debt than their undocumented mess.

They'll say "it doesn't scale" while I'm literally scaling applications with it.

They'll claim "it's not enterprise-ready" from their startup that can't ship a feature in under three months.

The Truth They Can't Handle

When you strip away all their deflections and insults, what's left? Fear. Fear that they've fallen behind. Fear that their resistance to change is showing. Fear that while they were writing think-pieces about why AI is overhyped, others were learning to leverage it and are now outpacing them dramatically.

It's easier to insult someone's intelligence than admit you're wrong. It's easier to call something "hype" than acknowledge you don't understand it. It's easier to gatekeep "real development" than accept that the field is evolving past your comfort zone.

But here's the thing… their ad hominem attacks don't make my deployment pipeline any slower. Their insults don't reduce my code quality. Their hand-waving doesn't change the fact that I'm shipping faster, better, and with more confidence than ever before.

In the end…

The gap between people leveraging AI and those dismissing it grows wider every day. It's entirely about mindset and effort.

Any intelligent person with an ounce of humility knows AI is incredibly powerful IF you approach it right. That means:

Writing documentation (which you should do anyway)

Creating examples (which help humans too)

Maintaining standards (which improve your codebase)

Providing context (which aids collaboration)

Your sloppy, undocumented project isn't AI's fault. Your lack of coding standards isn't AI's limitation. Your refusal to create proper stubs and examples isn't AI "hallucinating."

It's you being lazy.

The future belongs to those who adapt. And adaptation means treating AI like the powerful tool it is, rather than expecting magic from a system you refuse to properly configure.

If you still think AI is useless after reading this? Cool. I'll be shipping products at 10x speed with my properly documented, context-rich, AI-assisted workflow while you're still typing complaints about how it "doesn't work."

The only difference between us is that I spent a day setting up my AI properly. You spent a day complaining on LinkedIn.

Guess which one of us is more productive.

Want to Stop Whining and Start Winning?

Look, I get it. Setting up AI properly takes knowledge and effort. Not everyone has time to figure this out from scratch. That's where I come in.

At PolyxMedia, we build AI-powered solutions and we teach you how to leverage AI like we do.

What we offer:

AI Integration & Automation

We'll set up your entire AI workflow. The agents.md, the claude.md, the stubs, the documentation. Everything I described in this article, tailored to your specific tech stack and workflow. Stop fighting the tools and start shipping 10x faster.

Bespoke Software Development

Need a CMS like mine? A custom platform? An AI-enhanced application? We build it using the exact methodologies I've outlined here. Fast, documented, maintainable, and AI-ready from day one.

AI Training & Workshops

Your team doesn't know where to start? We'll bring them up to speed. No theoretical nonsense: practical, hands-on training showing exactly how to integrate AI into their daily workflow. We'll turn your AI skeptics into AI power users.

Consultancy Services

Sometimes you just need someone to audit your current setup and show you what's possible. We'll review your workflow, identify AI opportunities, and create a roadmap for implementation. No fluff, just actionable insights.

The difference between companies thriving with AI and those falling behind isn't the technology; it's the implementation. We bridge that gap.

Ready to stop complaining and start competing? Let's talk.

Get in touch at PolyxMedia. We'll show you what AI can actually do when you stop fighting it and start using it properly.

P.S. Still skeptical? That's fine. Keep doing things the old way. Your competitors using our AI workflows will appreciate the head start.