How Non‑Experts Took Over the AI Conversation (and Why It’s a Problem)

How non-experts hijack complex tech conversations—and why we need to stop mistaking confidence for competence.

It’s wild how often the loudest voices in tech conversations aren’t actually from people who build anything. Doesn’t matter if it’s AI, crypto, Web3, or whatever the next acronym will be—every time something new explodes, you get a wave of people confidently telling the world how it works… while having no technical background at all.

Case in point: a guy I’ll call Bob. Bob’s a marketer. Smart, articulate, definitely good at writing posts that make people engage. But during a recent thread on LinkedIn, he went full philosopher king on LLMs and hallucinations—dropping hot takes like “scaling models doesn’t improve their reliability” and “they fake their own test scores.”

Now, I work with this tech daily. I’ve integrated LLMs into production systems, I write code that uses them in workflows, and I see both the strengths and the edge cases first-hand. So I stepped in, not to start drama, just to correct a few misconceptions.

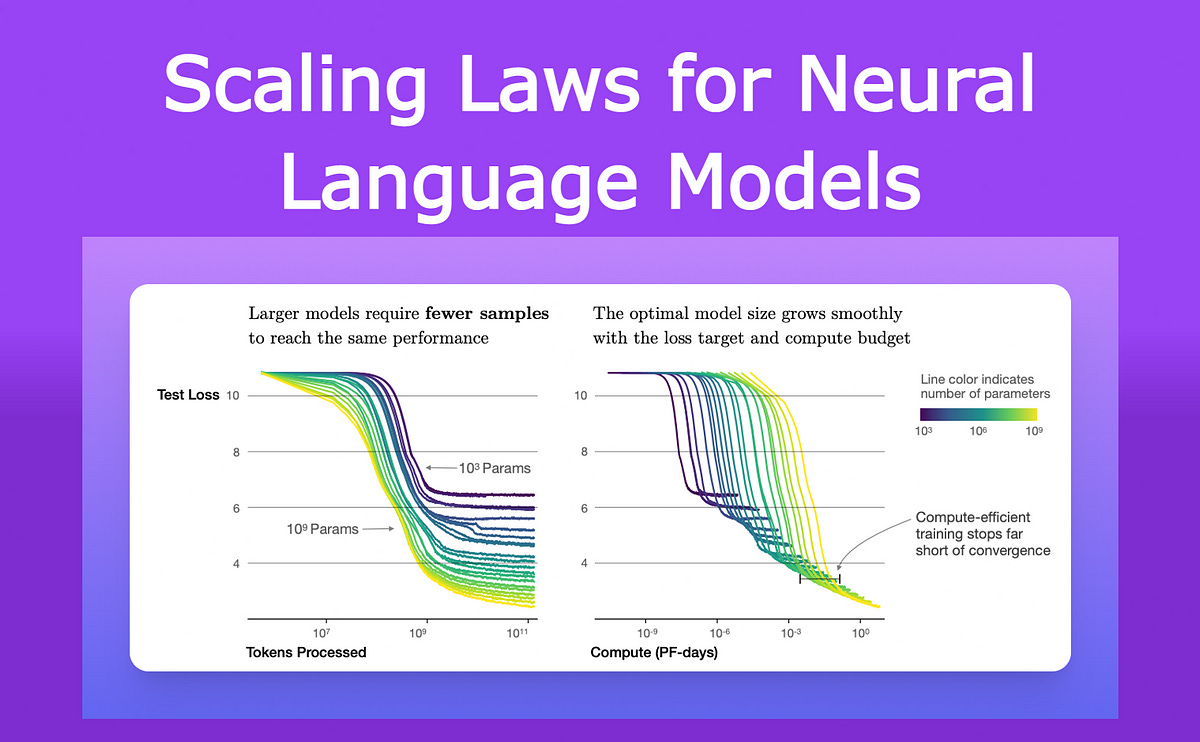

I pointed out that hallucinations come from a lack of grounding, that scaling does improve performance (this isn’t theory, it’s shown in papers from OpenAI, DeepMind, Anthropic, etc.), and that, like any other tool, AI works best when used by people who know what they’re doing.

What happened next was textbook deflection. Bob didn’t respond to the substance. He pivoted straight into character critique:

“That’s a typical engineer answer.”

“You’re not concerned enough about risk tolerance.”

“You’re using your technical knowledge to mask blind spots.”

And eventually:

“You didn’t understand the purpose of my post—it was a polemic.”

Right. Because nothing says intellectual honesty like declaring after the fact that your post was performance art and anyone who responded seriously just didn’t get it.

One of Bob’s recurring jabs was that I didn’t seem “concerned enough about risk.” That I was too casual about failure modes and didn’t factor in the consequences of LLMs making mistakes in high-stakes domains like legal, finance, or customer support.

Which is rich, because the people who actually build and deploy these systems think about risk constantly. It’s baked into everything: architecture decisions, fallback logic, testing protocols, red teaming, rate limits, logging, incident response. You don’t ship anything serious without thinking about how it breaks and what happens when it does.

What Bob actually meant was: you’re not scared enough to validate my point. See, if you’re not visibly panicking about LLMs hallucinating, then to someone outside the field, that must mean you’re “blinded by hype.” But in reality, the opposite is true: the people with the highest risk tolerance are usually the ones who understand the system the best—because they know where the edges are and how to guardrail them.

Fear doesn’t make you safer. Competence does. Knowing the risks and building for them beats loud warnings from someone who’s never had to roll back a production deployment in their life.

This is the trick: when someone without deep domain knowledge gets challenged, they don’t debate the facts. They attack the person. In this case, a marketer trying to frame himself as the only one who sees the “bigger picture,” while accusing me—someone who actually works with AI—of being blind to nuance. Classic status game.

But here’s the real issue: this kind of dynamic is everywhere right now. AI is hot, people are scared, and most folks don’t know enough to separate signal from noise. So when someone speaks with authority—especially in a confident, well-written way—they get believed. Even if what they’re saying is flat-out wrong.

The platforms don’t help. LinkedIn, Twitter, all of them reward emotion, not accuracy. Nuance doesn’t go viral. But nonsense with strong delivery gets pushed, because it elicits a flood of replies from people showing up to correct the clickbait.

That’s how you end up with marketers and recruiters trying to lecture engineers about how LLMs work. And when you correct them with facts, suddenly you’re “triggered” or “arrogant” or “blind to the real point.”

Here’s the thing: I’m not trying to win points in some debate club. I just don’t want people being misled by someone who sounds smart but is actually completely full of shit.

Especially when they’re talking about a field that impacts real systems, real users, and real consequences.

If you’re not sure who to trust in all the AI noise, here’s a simple filter:

Look at who’s shipping.

Who’s working with models in production.

Who actually gets paged when shit breaks.

Listen to those who do, not those who talk.

Those are the voices that matter, because they’ve got skin in the game and have actually studied the subject they’re talking about, rather than forming a surface-level opinion built on assumption instead of fact.

As for the Bobs of the world? Let them talk. Just don’t mistake volume for value.

You wouldn’t ask a farmer to perform your surgery—so why take technical advice from someone in marketing pretending they understand how AI works?